CATCH OF THE DAY: REFLECTIONS ON THE CHINESE SEIZURE OF A U.S. OCEAN GLIDER

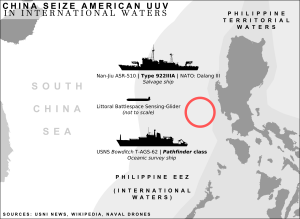

By Heiko Borchert

On 15 December 2016, China seized an Ocean Glider, an unmanned underwater vehicle (UUV) used by the U.S. Navy to conduct oceanographic tasks in international waters about 50-100 nautical miles northwest of the Subic Bay port in the Philippines. Available information suggests that the glider had been deployed from USNS Bowditch and was captured by Chinese sailors who came alongside the glider and grabbed it “despite the radioed protest from the Bowditch that it was U.S. property in international waters,” as the Guardian reported. The U.S. has “called upon China to return the UUV immediately.” On 17 December 2016, a spokesman for the Chinese Defense Ministry said China would return the UUV to the “United States in an appropriate manner.” Initial legal assessments by U.S. scholars like James Kraska and Paul Pedrozo suggest the capture violates the law of the sea, as the unmanne...